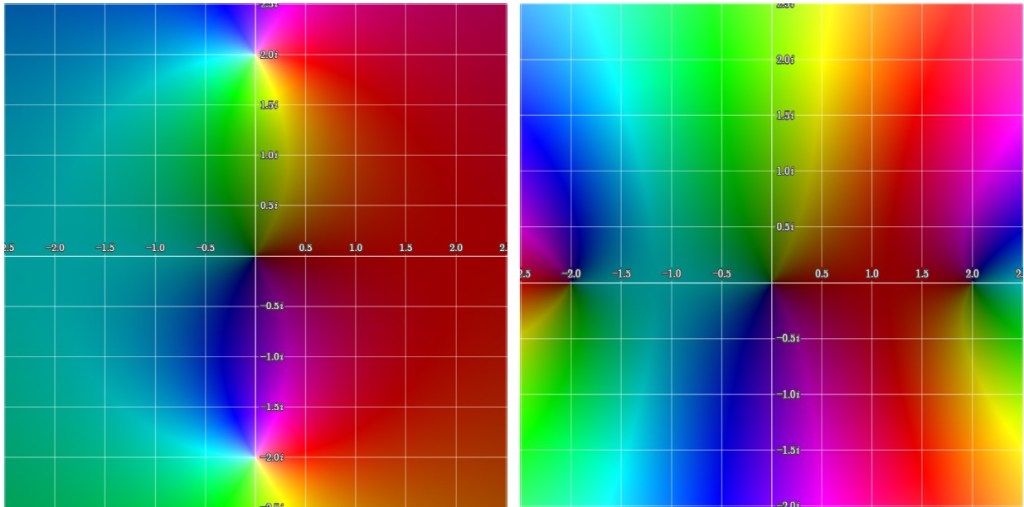

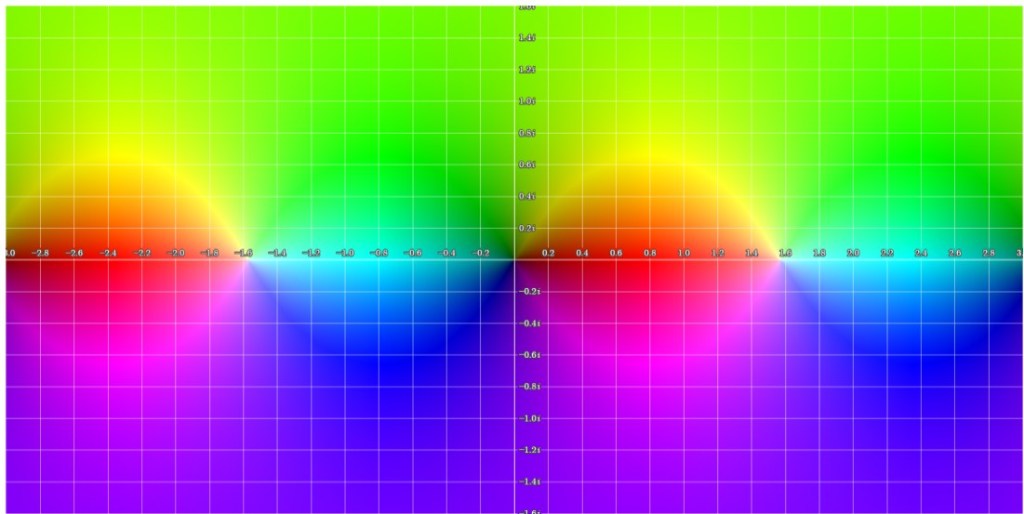

A well-known continued fraction representation of is:

Using the procedure described in the previous post, we can show that the near-diagonal Padé coefficients presented in this post can be converted to a sequence of truncated fractions corresponding to the continued fraction above (see figures below).


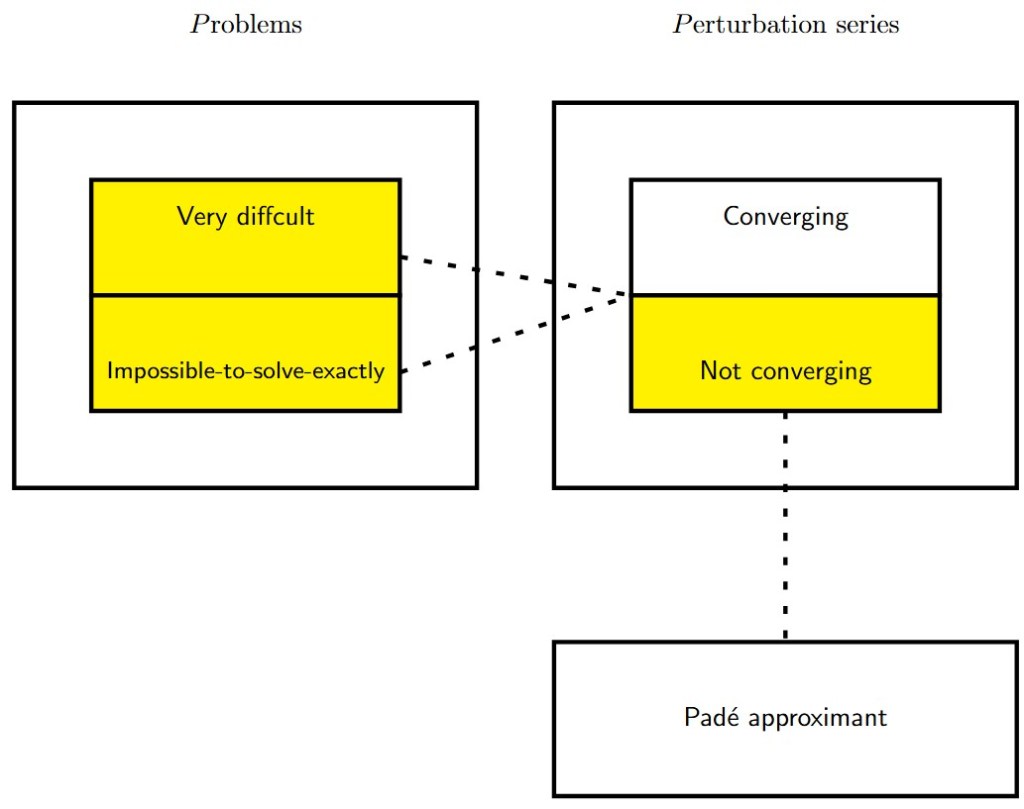

Caption: Padé approximants P(m,n), expressed as continued fractions.

Padé approximants and continued fractions are closely connected, and the connection is especially useful for approximating functions such as the one considered here. A Padé approximant is a rational function whose Taylor expansion matches that of the target function up to a specified order, and it can often be expressed as a continued fraction. This representation is advantageous because continued fractions can offer better convergence properties for certain functions, especially near singularities. For instance, the near-diagonal Padé approximants shown above can be systematically converted into a sequence of truncated continued fractions, revealing a structured pattern known as the “main Padé sequence.”
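To make the "matches the Taylor series up to a specified order" property concrete, here is a minimal sketch that computes the coefficients of P(m,n) from a list of Taylor coefficients by solving the usual linear system in exact rational arithmetic. The helper names `solve` and `pade`, the normalisation q[0] = 1, and the choice of exp(x) as the test function are assumptions made for illustration only; they are not taken from the post.

```python
from fractions import Fraction
from math import factorial

def solve(A, b):
    """Gauss-Jordan elimination over exact rationals.
    Assumes A is nonsingular (next() raises StopIteration otherwise)."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[piv] = M[piv], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [M[i][n] for i in range(n)]

def pade(c, m, n):
    """Return (p, q), the numerator and denominator coefficients of P(m,n),
    normalised so that q[0] = 1. Needs Taylor coefficients c[0..m+n]."""
    def get(k):
        return c[k] if k >= 0 else Fraction(0)
    # Denominator: sum_j q_j * c_{k-j} = 0 for k = m+1 .. m+n, with q_0 = 1.
    A = [[get(m + i - j) for j in range(1, n + 1)] for i in range(1, n + 1)]
    b = [-get(m + i) for i in range(1, n + 1)]
    q = [Fraction(1)] + (solve(A, b) if n else [])
    # Numerator: p_k = sum_j q_j * c_{k-j} for k = 0 .. m.
    p = [sum(q[j] * get(k - j) for j in range(min(k, n) + 1))
         for k in range(m + 1)]
    return p, q

# Illustrative input (an assumption): Taylor coefficients of exp(x) at 0.
c = [Fraction(1, factorial(k)) for k in range(6)]
p, q = pade(c, 2, 2)
print("numerator:  ", p)
print("denominator:", q)
```

Exact fractions are used instead of floats so that the coefficients can be compared term by term with hand-derived approximants.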

A function with a convergent Taylor series around a given expansion point can be approximated by a sequence of diagonal Padé approximants P(n,n), provided the associated Hankel matrices, built from the Taylor coefficients, have nonzero determinants.
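The determinant condition can be checked directly. The sketch below builds an n×n Hankel matrix from the Taylor coefficients and evaluates its determinant exactly. The indexing convention H[i][j] = c[i+j+1] (the one that governs the denominator of P(n,n)) is an assumption, since conventions differ between references, and exp(x) is again only an illustrative input.

```python
from fractions import Fraction
from math import factorial

def hankel(c, n):
    """n-by-n Hankel matrix with entries c[i + j + 1] (assumed convention).
    Needs Taylor coefficients c[0..2n-1]."""
    return [[c[i + j + 1] for j in range(n)] for i in range(n)]

def det(M):
    """Exact determinant by Gaussian elimination with row swaps."""
    M = [row[:] for row in M]
    n = len(M)
    d = Fraction(1)
    for col in range(n):
        piv = next((r for r in range(col, n) if M[r][col] != 0), None)
        if piv is None:
            return Fraction(0)      # a zero column means a singular matrix
        if piv != col:
            M[col], M[piv] = M[piv], M[col]
            d = -d                  # each row swap flips the sign
        d *= M[col][col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return d

# Illustrative input (an assumption): Taylor coefficients of exp(x) at 0.
c = [Fraction(1, factorial(k)) for k in range(6)]
for n in (1, 2, 3):
    print(n, det(hankel(c, n)))
```

A zero determinant at some order would signal that the corresponding approximant cannot be obtained from this linear system.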

When this “Padé table” is normal (i.e., these determinants are nonzero for all orders), each Padé approximant is uniquely defined.

Under these conditions, one can systematically derive a continued fraction whose successive convergents exactly match the Padé approximants.

This establishes a rigorous connection between the Taylor series, Padé approximation, and continued fraction representation of the function.
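The derivation described above can be sketched in a few lines. The code below is one possible approach (repeated series reciprocals, in the spirit of Viskovatov's method), not necessarily the procedure used in this series of posts. It assumes the continued fraction has the form f(x) = c0 + a1·x/(1 + a2·x/(1 + …)), and it uses exp(x) purely as an illustrative input. The helper names `series_inverse`, `cfrac_coeffs`, and `convergent` are hypothetical.

```python
from fractions import Fraction
from math import factorial

def series_inverse(r):
    """Coefficients of 1/r(x), truncated to len(r) terms; needs r[0] != 0."""
    s = [1 / r[0]]
    for k in range(1, len(r)):
        s.append(-sum(r[j] * s[k - j] for j in range(1, k + 1)) / r[0])
    return s

def cfrac_coeffs(c):
    """Return [a1, a2, ...] such that
    f(x) = c[0] + a1*x/(1 + a2*x/(1 + a3*x/(1 + ...)))."""
    a, r = [], list(c[1:])              # r(x) = (f(x) - c0) / x
    while r and r[0] != 0:
        a.append(r[0])
        g = [a[-1] * s for s in series_inverse(r)]  # g = a_k / r, so g(0) = 1
        r = g[1:]                                    # next r = (g - 1) / x
    return a

def convergent(c0, a, k):
    """Numerator and denominator coefficients of the k-th convergent,
    using A_k = A_{k-1} + a_k*x*A_{k-2} (and likewise for B_k)."""
    def step(p, coef, q):               # p(x) + coef * x * q(x)
        out = list(p) + [Fraction(0)] * max(0, len(q) + 1 - len(p))
        for i, v in enumerate(q):
            out[i + 1] += coef * v
        return out
    A_prev, A = [Fraction(1)], [Fraction(c0)]
    B_prev, B = [Fraction(0)], [Fraction(1)]
    for ak in a[:k]:
        A_prev, A = A, step(A, ak, A_prev)
        B_prev, B = B, step(B, ak, B_prev)
    return A, B

# Illustrative input (an assumption): Taylor coefficients of exp(x) at 0.
c = [Fraction(1, factorial(k)) for k in range(7)]
a = cfrac_coeffs(c)
print("partial numerators:", a)
print("4th convergent:", convergent(c[0], a, 4))
```

For a normal Padé table, the successive convergents of this form step through the near-diagonal entries P(0,0), P(1,0), P(1,1), P(2,1), P(2,2), and so on, which is why the fourth convergent can be compared with the diagonal approximant P(2,2).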