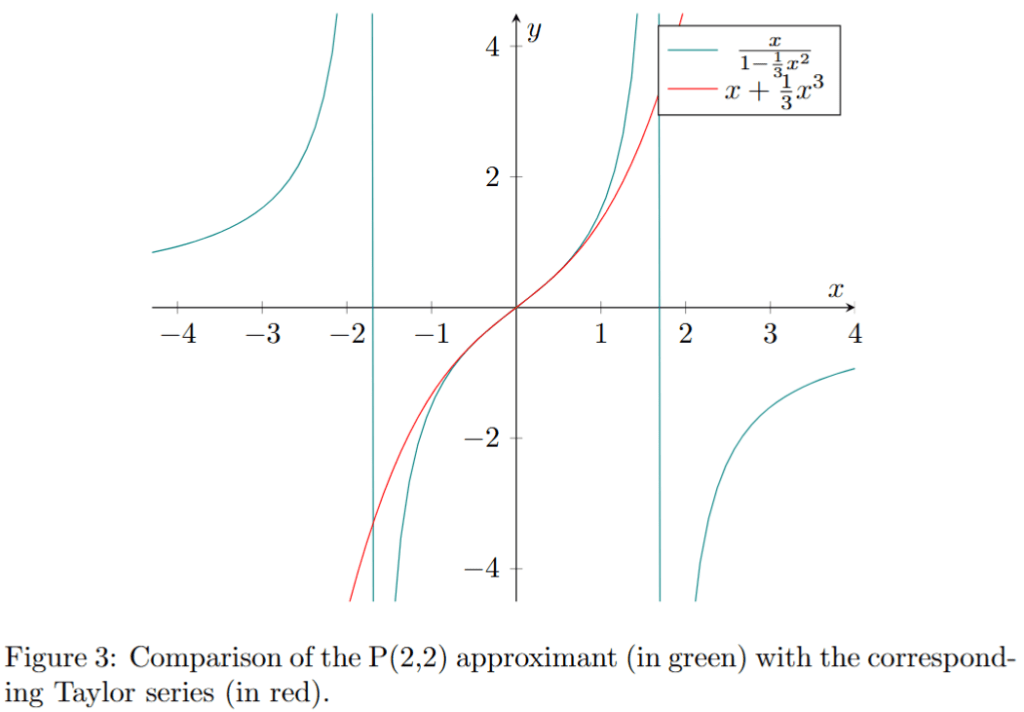

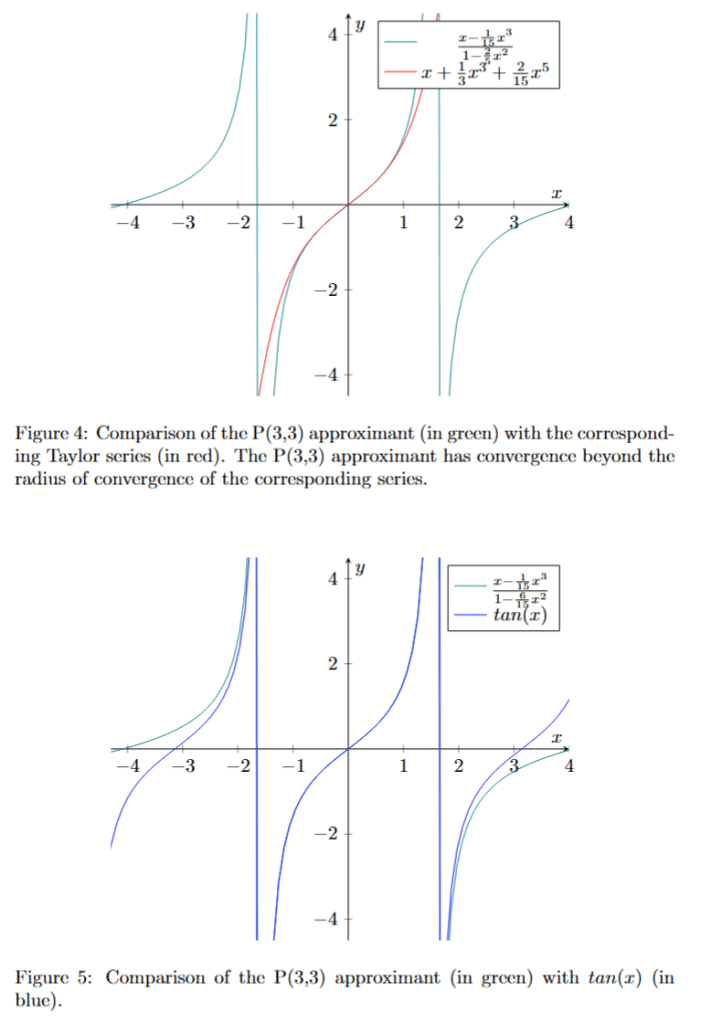

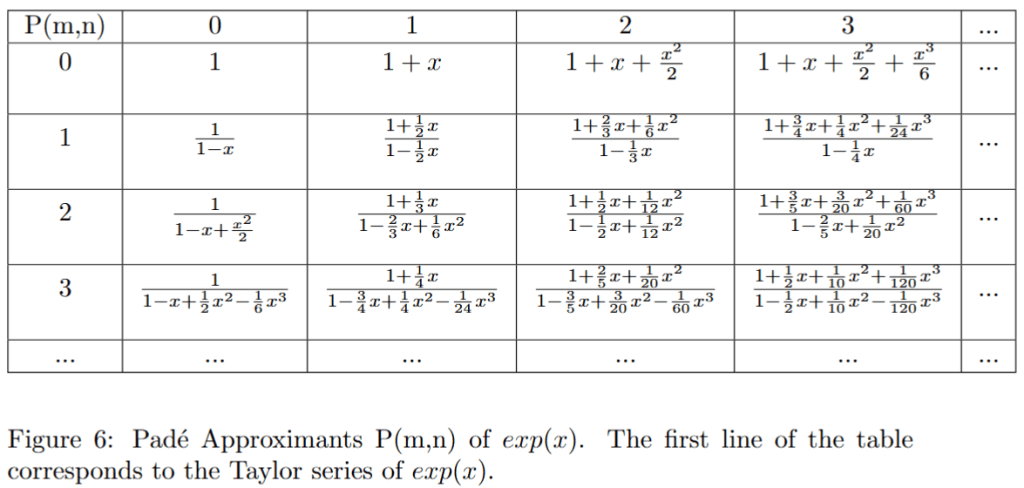

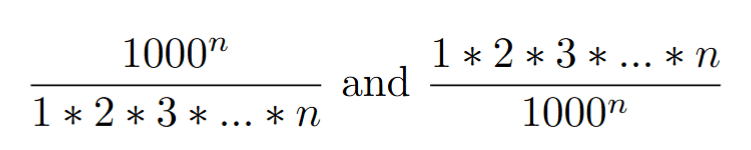

We have seen that Padé approximants offer a more efficient and flexible method for approximating functions than the Taylor expansion. They have been used in many areas of mathematics and physics.

We would like to find a more convenient way to calculate the Padé coefficients of any Taylor or Maclaurin series.

In what follows, we assume that the Padé approximant $[m/n]$ exists. It is generally possible to find its coefficients, except in degenerate cases caused by particular values of the coefficients of the corresponding series. We will not consider these cases in the rest of our study. Remember that we defined Padé approximants as rational functions, with numerator coefficients $a_k$ and denominator coefficients $b_j$:

$$R_{[m/n]}(x) = \frac{\sum_{k=0}^{m} a_k x^k}{\sum_{j=0}^{n} b_j x^j} = \frac{a_0 + a_1 x + \dots + a_m x^m}{b_0 + b_1 x + \dots + b_n x^n}$$

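If we use perturbation theory on a very hard problem, or even one that cannot be solved exactly, we end up with a power series such as $f(x) = \sum_{i=0}^{\infty} c_i x^i$. To convert this (potentially divergent) series into its corresponding Padé approximant, with numerator coefficients $a_k$ and denominator coefficients $b_j$, we write (as described in the previous post):

$$\sum_{i=0}^{\infty} c_i x^i = \frac{\sum_{k=0}^{m} a_k x^k}{\sum_{j=0}^{n} b_j x^j} + O(x^{m+n+1})$$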

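From a purely algorithmic point of view, and in order to calculate the Padé coefficients, we multiply through by the denominator and drop the $O(x^{m+n+1})$ term:

$$\left(\sum_{j=0}^{n} b_j x^j\right)\left(\sum_{i=0}^{m+n} c_i x^i\right) = \sum_{k=0}^{m} a_k x^k$$

Here $c_i$ are the series coefficients, $a_k$ and $b_j$ are the numerator and denominator coefficients, and the equality is required to hold for all powers up to $x^{m+n}$.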

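Comparing the coefficients of each power of $x$ on the left and right sides of this equation (uniqueness of power series coefficients), we obtain the following set of equations, where $c_i$ are the series coefficients and $a_k$, $b_j$ are the numerator and denominator coefficients of the $[m/n]$ approximant:

$$\sum_{\substack{i+j=k \\ 0 \le j \le n}} c_i b_j \, x^k = a_k x^k \quad (k = 0, \dots, m), \qquad \sum_{\substack{i+j=k \\ 0 \le j \le n}} c_i b_j \, x^k = 0 \quad (k = m+1, \dots, m+n)$$

Using $c_i = 0$ for $i < 0$, we can split this system of equations into two parts and get rid of the $x$-terms: one part containing the $a_k$ coefficients (up to $i+j = m$) and one part without $a_k$ coefficients. The first system of equations is:

$$\begin{aligned}
a_0 &= c_0 b_0 \\
a_1 &= c_1 b_0 + c_0 b_1 \\
&\;\;\vdots \\
a_m &= c_m b_0 + c_{m-1} b_1 + \dots + c_{m-n} b_n
\end{aligned}$$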

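The second system of equations, which involves only the series coefficients $c_i$ and the denominator coefficients $b_j$, is:

$$\begin{aligned}
c_{m+1} b_0 + c_m b_1 + \dots + c_{m-n+1} b_n &= 0 \\
c_{m+2} b_0 + c_{m+1} b_1 + \dots + c_{m-n+2} b_n &= 0 \\
&\;\;\vdots \\
c_{m+n} b_0 + c_{m+n-1} b_1 + \dots + c_m b_n &= 0
\end{aligned}$$

Setting $b_0 = 1$ without loss of generality (numerator and denominator can always be rescaled by a common factor), the second system of equations becomes:

$$\begin{aligned}
c_m b_1 + c_{m-1} b_2 + \dots + c_{m-n+1} b_n &= -c_{m+1} \\
c_{m+1} b_1 + c_m b_2 + \dots + c_{m-n+2} b_n &= -c_{m+2} \\
&\;\;\vdots \\
c_{m+n-1} b_1 + c_{m+n-2} b_2 + \dots + c_m b_n &= -c_{m+n}
\end{aligned}$$

Changing the order of the coefficients gives:

$$\begin{aligned}
c_{m-n+1} b_n + c_{m-n+2} b_{n-1} + \dots + c_m b_1 &= -c_{m+1} \\
c_{m-n+2} b_n + c_{m-n+3} b_{n-1} + \dots + c_{m+1} b_1 &= -c_{m+2} \\
&\;\;\vdots \\
c_m b_n + c_{m+1} b_{n-1} + \dots + c_{m+n-1} b_1 &= -c_{m+n}
\end{aligned}$$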

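We can write both systems using matrix notation, with series coefficients $c_i$ (and $c_i = 0$ for $i < 0$), numerator coefficients $a_k$, and denominator coefficients $b_j$ normalised so that $b_0 = 1$:

$$\begin{pmatrix} a_0 \\ a_1 \\ \vdots \\ a_m \end{pmatrix} = \begin{pmatrix} c_0 & c_{-1} & \cdots & c_{-n} \\ c_1 & c_0 & \cdots & c_{1-n} \\ \vdots & \vdots & \ddots & \vdots \\ c_m & c_{m-1} & \cdots & c_{m-n} \end{pmatrix} \begin{pmatrix} 1 \\ b_1 \\ \vdots \\ b_n \end{pmatrix}$$

$$\begin{pmatrix} c_{m-n+1} & c_{m-n+2} & \cdots & c_m \\ c_{m-n+2} & c_{m-n+3} & \cdots & c_{m+1} \\ \vdots & \vdots & \ddots & \vdots \\ c_m & c_{m+1} & \cdots & c_{m+n-1} \end{pmatrix} \begin{pmatrix} b_n \\ b_{n-1} \\ \vdots \\ b_1 \end{pmatrix} = -\begin{pmatrix} c_{m+1} \\ c_{m+2} \\ \vdots \\ c_{m+n} \end{pmatrix}$$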
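The two linear systems can be sketched in a few lines of Python. This is only a minimal illustration, not a reference implementation: the names `solve_linear` and `pade_coefficients` are hypothetical, the convention that series coefficients with a negative index are zero is assumed, and the denominator is normalised so that its constant term is 1. It uses exact rational arithmetic from the standard library, and it solves the system without $a$ coefficients in its unreordered form (unknowns $b_1, \dots, b_n$), which has the same solution as the reordered one.

```python
from fractions import Fraction


def solve_linear(A, rhs):
    """Solve A x = rhs exactly by Gauss-Jordan elimination on Fractions."""
    n = len(A)
    # Augmented matrix [A | rhs], converted to exact rationals.
    M = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(A, rhs)]
    for col in range(n):
        # Find a row with a non-zero pivot in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular system (degenerate case)")
        M[col], M[pivot] = M[pivot], M[col]
        # Normalise the pivot row, then clear this column in the other rows.
        pivot_value = M[col][col]
        M[col] = [v / pivot_value for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


def pade_coefficients(c, m, n):
    """Return (a, b) for the [m/n] approximant of sum_i c[i] x^i, with b[0] = 1."""
    if len(c) < m + n + 1:
        raise ValueError("need the series coefficients c_0 .. c_{m+n}")

    def coef(i):
        # Assumed convention: c_i = 0 for i < 0.
        return Fraction(c[i]) if i >= 0 else Fraction(0)

    # System without a: sum_{j=1..n} c_{k-j} b_j = -c_k for k = m+1 .. m+n.
    A = [[coef(k - j) for j in range(1, n + 1)] for k in range(m + 1, m + n + 1)]
    rhs = [-coef(k) for k in range(m + 1, m + n + 1)]
    b = [Fraction(1)] + (solve_linear(A, rhs) if n > 0 else [])

    # System with a: a_k = sum_{j=0..min(k,n)} c_{k-j} b_j for k = 0 .. m.
    a = [sum(coef(k - j) * b[j] for j in range(min(k, n) + 1)) for k in range(m + 1)]
    return a, b


# Example: [2/2] approximant of exp(x) = 1 + x + x^2/2 + x^3/6 + x^4/24 + ...
c_exp = [Fraction(1), Fraction(1), Fraction(1, 2), Fraction(1, 6), Fraction(1, 24)]
a, b = pade_coefficients(c_exp, 2, 2)
print(a)  # [Fraction(1, 1), Fraction(1, 2), Fraction(1, 12)]
print(b)  # [Fraction(1, 1), Fraction(-1, 2), Fraction(1, 12)]
```

The example recovers the familiar result $e^x \approx \dfrac{1 + x/2 + x^2/12}{1 - x/2 + x^2/12}$. Exact fractions were chosen here to avoid rounding issues; a floating-point linear solver would work as well for well-conditioned cases.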

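In practice, we solve the second system first. It gives the values of the denominator coefficients $b_j$, which are then injected into the first system to obtain the numerator coefficients $a_k$ (using $b_0 = 1$). So if the following determinant of series coefficients $c_i$ (called a ‘Hankel determinant’):

$$\begin{vmatrix} c_{m-n+1} & c_{m-n+2} & \cdots & c_m \\ c_{m-n+2} & c_{m-n+3} & \cdots & c_{m+1} \\ \vdots & \vdots & \ddots & \vdots \\ c_m & c_{m+1} & \cdots & c_{m+n-1} \end{vmatrix}$$

is not zero, i.e.:

$$\det\left(c_{m-n+i+j-1}\right)_{i,j=1}^{n} \neq 0$$

the Padé approximant is unique. In other words, for any Taylor or Maclaurin series, the calculation of the Padé coefficients yields a unique solution provided the Hankel determinant does not vanish. A vanishing determinant corresponds to the degenerate cases, due to specific coefficient values, that we set aside earlier.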
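The uniqueness condition can be checked numerically before attempting to solve for the coefficients. The sketch below is an illustration under stated assumptions: `hankel_determinant` is a hypothetical helper name, series coefficients with a negative index are taken to be zero, and the determinant is computed by exact fraction-based elimination rather than by any particular library routine.

```python
from fractions import Fraction


def hankel_determinant(c, m, n):
    """Determinant of the n x n matrix H[r][s] = c_{m-n+1+r+s} (c_i = 0 for i < 0)."""
    def coef(i):
        return Fraction(c[i]) if i >= 0 else Fraction(0)

    H = [[coef(m - n + 1 + r + s) for s in range(n)] for r in range(n)]
    det = Fraction(1)
    for col in range(n):
        # Look for a non-zero pivot; if none exists the determinant is zero.
        pivot = next((r for r in range(col, n) if H[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            # A row swap flips the sign of the determinant.
            H[col], H[pivot] = H[pivot], H[col]
            det = -det
        det *= H[col][col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = H[r][col] / H[col][col]
            H[r] = [vr - factor * vc for vr, vc in zip(H[r], H[col])]
    return det


# exp(x), [2/2]: the determinant is non-zero, so the approximant is unique.
c_exp = [Fraction(1), Fraction(1), Fraction(1, 2), Fraction(1, 6), Fraction(1, 24)]
print(hankel_determinant(c_exp, 2, 2))  # -1/12

# 1 + x^2, [1/1]: the determinant vanishes, which signals a degenerate case.
print(hankel_determinant([1, 0, 1], 1, 1))  # 0
```

In the second example the only equation for $b_1$ reads $0 \cdot b_1 = -1$, which has no solution, so no $[1/1]$ approximant with $b_0 = 1$ matches the series to the required order.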